Statistical Significance in Split Testing

In marketing, we LOVE split testing, and for good reason: it’s a fantastic method of optimizing a campaign for the best conversion rates and, ultimately, the biggest profit margins.

However, one very important thing to keep in mind when running these experiments is the “statistical significance” of the results. For those of you whose eyes just glazed over when I dropped the word statistics… I’ll try to keep this topic high-level and easy to digest.

If you look up the wiki entry on statistical significance, you’ll find that we’re essentially trying to determine whether the results we get from a split test are meaningful and repeatable, rather than the product of random chance.

Why is this important?

Let’s imagine that we are attempting to test the effectiveness of 2 headline options on the sales page for our next launch. We want to figure out if headline “A” or headline “B” is producing more sales.

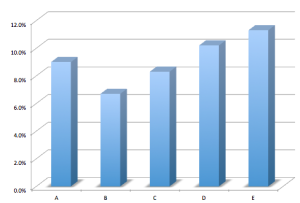

Here are the hypothetical results after sending 1100 visitors to each sales page:

Example #1

Headline “A”

Number of visitors: 1100

Conversion rate: 5%

Headline “B”

Number of visitors: 1100

Conversion rate: 9%

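Using a statistical difference calculator, we can determine that the difference is 4 percentage points, with a comparative error of 2.1 percentage points. Don’t worry if you don’t understand the math behind it. Just be aware that a higher comparative error is bad: the difference only counts as statistically significant when it is larger than the comparative error.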

The part you should care about is that the answer is “YES”: there is a statistical difference. We have solid statistical evidence that Headline “B” is converting better, so we’ll go ahead and put all of our advertising dollars behind Headline “B”.
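If you’re curious what a calculator like this is doing under the hood, here is a minimal Python sketch. I’m assuming the “comparative error” is the usual 95% margin of error on the difference between two conversion rates (z = 1.96), which matches the 2.1 figure above, but your calculator may use a slightly different method. The function names `comparative_error` and `is_significant` are my own, not part of any tool.

```python
import math

def comparative_error(rate_a, n_a, rate_b, n_b, z=1.96):
    """Approximate margin of error on the difference, in percentage points.

    Assumption: a normal approximation with z = 1.96 (roughly 95% confidence).
    """
    standard_error = math.sqrt(
        rate_a * (1 - rate_a) / n_a + rate_b * (1 - rate_b) / n_b
    )
    return z * standard_error * 100

def is_significant(rate_a, n_a, rate_b, n_b, z=1.96):
    """True when the observed difference is larger than the comparative error."""
    difference = abs(rate_b - rate_a) * 100
    return difference > comparative_error(rate_a, n_a, rate_b, n_b, z)

# Example #1: 1100 visitors per headline, 5% vs. 9% conversion
print(round(comparative_error(0.05, 1100, 0.09, 1100), 1))  # 2.1
print(is_significant(0.05, 1100, 0.09, 1100))               # True
```

The whole test boils down to one comparison: is the gap between the two headlines bigger than the noise we’d expect from that many visitors?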

Now what happens if we reduce the traffic to the site during the test? Can we still be sure that Headline “B” is better? Let’s suppose that we were only able to drive 200 visitors to each headline. Are our results still valid?

Example #2

Headline “A”

Number of visitors: 200

Conversion rate: 5%

Headline “B”

Number of visitors: 200

Conversion rate: 9%

Checking the calculator again, we now see that the comparative error has jumped from 2.1 to 5 (remember, higher is worse!). Because that is larger than our 4-point difference, the result is that there is NOT a statistical difference between the two headlines.

What does this mean in practical terms?

Say we have $10,000 to spend on advertising for this campaign. From our quick calculations, we have shown that the results from Example #2 could be a total fluke. We didn’t have enough visitors to the site to validate that the results are meaningful! So now we really DON’T know which headline will yield better results without sending more traffic to verify. Better do some more testing!
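So how much more traffic is “more”? Here is a rough Python sketch that estimates the smallest number of visitors per headline at which a given difference would clear the margin of error. It rests on two assumptions of mine: that the comparative error is a 95% margin of error (z = 1.96), and that the 5% and 9% rates would hold steady with more traffic, which is exactly the thing we don’t know yet. Treat it as a floor for planning, not a formal power analysis. The function name `min_visitors_per_page` is hypothetical.

```python
import math

def min_visitors_per_page(rate_a, rate_b, z=1.96):
    """Smallest equal sample size per page at which the observed difference
    exceeds the margin of error.

    Assumptions: normal approximation, z = 1.96, and conversion rates that
    stay at the observed values as traffic grows.
    """
    difference = abs(rate_b - rate_a)
    variance_terms = rate_a * (1 - rate_a) + rate_b * (1 - rate_b)
    # Solve z * sqrt(variance_terms / n) < difference for n
    threshold = z ** 2 * variance_terms / difference ** 2
    # floor + 1 keeps the inequality strict even if threshold is a whole number
    return math.floor(threshold) + 1

# 5% vs. 9% conversion, as in both examples
print(min_visitors_per_page(0.05, 0.09))  # 311
```

That lines up with the two examples: 200 visitors per headline falls short of the estimate, while 1100 clears it comfortably.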

Have you ever run a split test with interesting results? Post your story below in the comments.

I love testing, even when I have a smaller segment or list because it keeps me thinking “what could I do better” but you’re absolutely right, the significance of that 1% is hampered if there’s not a big enough sample size. Solution: get a bigger reach!

Jeez. This makes you realize which numbers are actually important. People get so caught up in tracking everything that they miss the forest for the trees.